Introduction

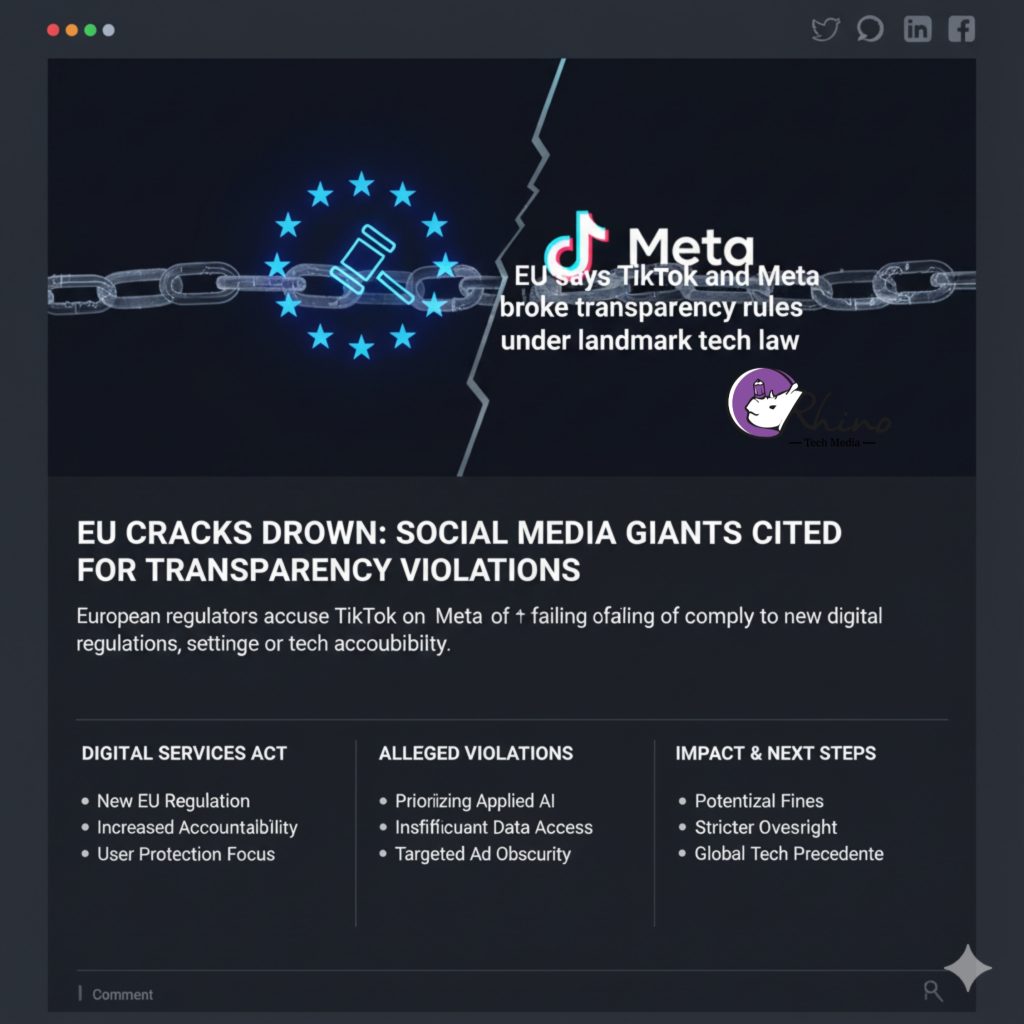

In a significant development in digital regulation, the European Commission (EC) has wielded the EU’s Digital Services Act (DSA) in what many interpret as a “show-the-teeth” moment for Big Tech. On 24 October 2025, the Commission publicly issued preliminary findings that two of the largest online platforms, Meta Platforms, Inc. (owner of Facebook and Instagram) and TikTok (owned by ByteDance), have breached transparency obligations under the DSA.

This essay will: (1) explain the relevant legal framework and what obligations the DSA imposes; (2) summarise the specific findings against Meta and TikTok; (3) analyse the implications of these findings for the companies, for EU digital regulation, and for users; and (4) offer reflections on what this signifies (and what remains uncertain).

1. The Legal Framework: The DSA and Its Transparency Obligations

The DSA is the EU’s landmark regulatory regime for online platforms, with its strictest obligations reserved for those designated as Very Large Online Platforms (VLOPs). The regulation entered into force in November 2022; its obligations began to apply to designated VLOPs in 2023 and to all other in-scope services in February 2024. It aims to address risks posed by major platforms: dissemination of illegal content, lack of transparency in moderation, algorithmic opacity, lack of access for researchers, and insufficient oversight of systemic risks.

Among its key transparency obligations:

- VLOPs must give researchers adequate access to public data so that they can examine systemic risks and the impact of the platforms (including on minors, on health, and on public discourse).

- Platforms must provide simple, accessible mechanisms for users to report illegal content, appeal moderation decisions, and challenge actions.

- Platforms must avoid “dark patterns”, meaning design elements that mislead or confuse users or that make it onerous for them to exercise their rights under the platform’s own rules and EU law.

- The EC can impose fines up to 6% of global annual turnover for confirmed breaches.

In short: the DSA enshrines transparency and accountability as duties, not optional commitments. As the EC put it in its press release: “Our democracies depend on trust. That means platforms must empower users, respect their rights, and open their systems to scrutiny. The DSA makes this a duty, not a choice.”

2. The Findings Against Meta and TikTok

The EC’s preliminary findings against Meta and TikTok (published 24 October 2025) highlight several alleged failures:

2.1 Failure to provide adequate researcher access to public data

The Commission preliminarily found that both TikTok and Meta imposed burdensome procedures and tools on researchers requesting access to platform data, leaving them with “partial or unreliable data” and inhibiting their ability to assess whether users, including minors, are exposed to illegal or harmful content. In the Commission’s words: “The Commission’s preliminary findings show that Facebook, Instagram and TikTok may have put in place burdensome procedures and tools for researchers to request access to public data.”

2.2 Meta’s ineffective user mechanisms for reporting illegal content and appeals

Specifically for Meta (Facebook and Instagram), the findings claim the company did not provide a sufficiently simple mechanism for users to flag illegal content (such as child sexual abuse material or terrorist content) or to effectively challenge moderation decisions. The EC points to “dark patterns” in Meta’s interfaces that make the reporting process confusing or discouraging.

2.3 TikTok’s response: the tension between privacy and transparency

TikTok responded that it had made “substantial investments” in data sharing and had given many research teams access, but that the DSA’s transparency requirements appear to clash with the General Data Protection Regulation (GDPR). The company argues that regulators must clarify how the two sets of obligations are to be reconciled.

2.4 Potential consequences

These are preliminary findings—not yet final decisions—but if confirmed, the EC has the power to impose fines up to 6% of global annual turnover.
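To give a sense of scale, the 6% ceiling can be worked through as simple arithmetic. The sketch below is illustrative only: the function name `max_dsa_fine` and the turnover figure are hypothetical and are not taken from the findings or from either company’s accounts. It computes only the upper bound, not the fine the Commission would actually set.

```python
# Ceiling described in the text: fines of up to 6% of global annual turnover.
DSA_MAX_FINE_PERCENT = 6


def max_dsa_fine(global_annual_turnover: int) -> int:
    """Return the upper bound on a DSA fine, in the same currency units as the input."""
    if global_annual_turnover < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    return global_annual_turnover * DSA_MAX_FINE_PERCENT // 100


# Hypothetical turnover chosen purely for illustration (not a real company figure).
example_turnover = 100_000_000_000

print(f"Maximum fine: {max_dsa_fine(example_turnover):,}")  # prints "Maximum fine: 6,000,000,000"
```

On that assumed turnover of 100 billion, the ceiling would be 6 billion; any actual penalty could be well below that cap.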

3. Implications and Significance

3.1 For the companies (Meta & TikTok)

- These findings mark a major regulatory exposure for the two companies, signalling that the EU is ready to apply the DSA rigorously.

- The threat of large fines increases commercial risk. Even if compliance is achieved, reputational and operational adjustments will be required (e.g., improving data access for researchers; redesigning user-reporting mechanisms).

- Meta, in particular, faces scrutiny over its moderation systems and interface design. For TikTok, the issue may be strategic: balancing transparency with data-privacy obligations.

- The companies now have a chance to engage with the EC, respond to the findings, implement changes, and potentially reduce penalties. The process remains ongoing.

3.2 For EU digital regulation and platform governance

- The move underscores that the DSA is more than a symbolic framework—it is enforceable and operational.

- It sets precedent: large platforms operating in Europe must expect elevated scrutiny around transparency, data access, user rights, and systemic risk management.

- It highlights the tension between transparency obligations and other regulatory regimes (notably GDPR): how to enable researcher access while protecting personal data rights remains a structural question.

- The action may influence global standards: as other jurisdictions (UK, US, India) look at platform regulation, the EU’s enforcement may act as a benchmark.

3.3 For users, researchers, society

- For researchers: the findings signal that access to platform data is getting regulatory backing; if compliance is improved, research into platform impacts (on minors, on health, on public discourse) may become more feasible and less obstructed.

- For users: the emphasis on simple reporting and appeals means that platforms might need to strengthen user rights and make these mechanisms easier to use, at least in the EU.

- For society: the investigation reflects broader worries that platforms carry systemic risks (misinformation, harmful content, addictive design), and that transparency is a key lever for accountability. As the EC said, allowing researchers access is an “essential transparency obligation … as it provides public scrutiny into the potential impact of platforms on our physical and mental health.”

4. Reflections: What Does This Mean and What’s Unclear

4.1 Meaning

- The action can be seen as a watershed moment: major tech companies must treat the DSA as a credible enforcement threat, not a “nice to have” compliance exercise.

- It may prompt platforms to proactively redesign transparency, user-reporting, and data-sharing systems, potentially opening up the “black box” nature of large social platforms.

- It emphasises that accountability isn’t only a matter of content removal or moderation, but also of enabling scrutiny (by researchers, users, society) of how platforms operate.

4.2 What is still uncertain

- These are preliminary findings. The companies can respond; final decisions may differ in scope, findings, or penalties.

- Whether the EC will find against both companies on all counts, or only on select obligations, remains to be seen.

- The practical tension between data‐access obligations (DSA) and data-protection/privacy obligations (GDPR) is not yet fully resolved, especially for a global platform operating in multiple jurisdictions (TikTok’s argument).

- How this will play out globally (e.g., outside the EU) is uncertain: will similar enforcement actions emerge elsewhere, or will platforms treat this as an “EU special” case?

- Whether platforms will meaningfully change their design practices (removing dark patterns, improving user reporting and appeals) or simply settle for minimal, box-ticking compliance is an open question.

Conclusion

The European Commission’s preliminary determination that Meta and TikTok have breached transparency obligations under the Digital Services Act is a landmark moment. It puts the DSA into operational effect, signals serious regulatory risk for large platforms, and underscores the importance of transparency, researcher access, user rights, and design accountability for digital platforms. For Meta and TikTok, the path ahead involves navigating regulatory pressure, refining systems, and potentially facing substantial penalties. For society, it offers the prospect of more “open windows” into how major platforms affect our lives, though much depends on how strictly enforcement is carried through and how robust the platforms’ responses prove to be.